Unraveling Graph Counterfactual Explainability: from Theoretical Foundations to Technical Mastery

Tutorial at the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML-PKDD 2025)

Organisers

Dr. Bardh Prenkaj

Andrea D'Angelo

Efstratios Zaradoukas

Mario A. Prado-Romero

Prof. Giovanni Stilo

Slides will be available for download (TBA).

Table of contents

- Abstract

- Duration

- Scope of the tutorial

- Prerequisites

- Outline & Contents

- Acknowledgement

- Meet the Speakers

- Bibliography

Highlights

The tutorial is based on our ACM Computing Surveys article, "A Survey on Graph Counterfactual Explanations: Definitions, Methods, Evaluation, and Research Challenges". Use the following BibTeX to cite our paper.

@article{10.1145/3618105,
  author = {Prado-Romero, Mario Alfonso and Prenkaj, Bardh and Stilo, Giovanni and Giannotti, Fosca},
  title = {A Survey on Graph Counterfactual Explanations: Definitions, Methods, Evaluation, and Research Challenges},
  year = {2023},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  issn = {0360-0300},
  url = {https://doi.org/10.1145/3618105},
  doi = {10.1145/3618105},
  journal = {ACM Computing Surveys},
  month = {sep}
}

Abstract

Graph Neural Networks (GNNs) are transforming AI applications in bioinformatics, fraud detection, recommender systems, and social network analysis. However, their black-box nature limits adoption in high-stakes domains where transparency is essential. Graph Counterfactual Explainability (GCE) addresses this challenge by identifying minimal changes in graph structure or features that alter model predictions, providing interpretable and actionable insights. This tutorial equips attendees with the theoretical foundations and practical tools to understand, develop, and apply counterfactual explainability methods for GNNs. We begin with an overview of GNN architectures and the challenges of generating post-hoc explanations. Next, we define GCE, exploring its advantages over factual explanations and its role in algorithmic recourse. We present a structured taxonomy of counterfactual explainers, covering search-based, heuristic, and learning-based approaches. Additionally, we examine evaluation metrics, datasets, and benchmarking protocols to assess the effectiveness of counterfactual explanations. A key focus is counterfactual explainability in evolving graphs, where distribution shifts and temporal dependencies affect model reliability. We also highlight the importance of open-source frameworks such as GRETEL, which provide standardized tools for evaluating and deploying counterfactual explainers, accelerating research and real-world adoption. By the end of this tutorial, participants will be equipped to critically assess counterfactual explanations, leverage existing frameworks, and apply GCE techniques in dynamic graph settings.

Duration

The tutorial runs for half a day (4 hours), the format best suited to introducing attendees to counterfactual explanations on graphs and their foundational concepts. This duration balances a comprehensive understanding of the topic with the technical detail attendees need to start programming with GRETEL.

Scope of the tutorial

The ECML-PKDD community has shown growing interest in Explainable AI and Graph Neural Networks (GNNs). Graph Counterfactual Explainability (GCE) offers a compelling direction by revealing minimal changes in graph structure or features that flip model predictions—providing actionable, human-interpretable insights into black-box GNNs. This tutorial will introduce the foundations of GNNs and explainability methods, before diving into counterfactual reasoning, its advantages over factual explanations, and its role in algorithmic recourse. We will present a structured taxonomy of GCE techniques, cover evaluation metrics and benchmarking protocols, and explore real-world applications on molecular and dynamic graphs. Special attention will be given to evolving graphs and open-source tools like GRETEL, which standardize and accelerate the development of GCE methods.

The tutorial is aimed at researchers and practitioners in machine learning, data mining, and XAI. Participants will gain both conceptual understanding and practical tools to develop and evaluate counterfactual explanations in graph-based models.

Prerequisites

The tutorial is aimed at practitioners in academia and industry interested in applying machine learning techniques to analyze graph data.

Familiarity with basic ML concepts will be beneficial for thoroughly understanding the tutorial.

Outline & Contents

Counterfactual explanations shed light on a model's decision-making mechanisms and provide alternative scenarios that yield different outcomes, offering end users recourse. These distinctive characteristics make counterfactual explainability highly effective in graph-based contexts. We adopt a multifaceted approach, organizing and examining GCE from different perspectives: method taxonomy, classification along various dimensions, detailed descriptions of individual works (including their evaluation processes), discussions of evaluation measures, and commonly used benchmark datasets. We comprehensively review counterfactual explainability in graphs and its importance relative to other (factual) explainability methods. Figure 1 gives a brief outline of the tutorial.

- Part I: Introduction and Background (20 mins)
    - The rise of Graph Neural Networks (GNNs) in AI
    - Fundamental principles: How do GNNs work?
    - Applications across domains:
        - Traffic modeling
        - Physical simulations
        - Protein interaction networks
        - Large-scale recommender systems
    - Key challenges in understanding GNN predictions

- Part II: Explainability in Graphs (XAI) (30 mins)
    - The black-box problem in GNNs and its implications
    - Overview of Explainable AI (XAI) techniques for graphs
    - Factual explanations vs. counterfactual explanations
    - A deep dive into existing factual explainers:
        - GNNExplainer: Strengths and limitations
        - GraphLIME: Local interpretability in GNNs
        - Other noteworthy post-hoc explanation methods

- Part III: Fundamentals of Graph Counterfactual Explainability (GCE) (45 mins)
    - Defining counterfactual explanations in the graph domain
    - Why counterfactuals? Advantages over factual explanations
    - Challenges in generating counterfactuals for graphs:
        - Structural constraints in graphs
        - Ensuring plausibility and feasibility of counterfactuals
        - Computational complexity concerns
    - Taxonomy of GCE methods:
        - Instance-level explainers
            - Search-based approaches
            - Heuristic-based approaches
            - Learning-based approaches
        - Model-level explainers
            - Global explanations for GNNs

- Part IV: Evaluating Graph Counterfactual Explanations (30 mins)
    - Overview of benchmarking datasets:
        - Synthetic vs. real-world graph datasets
        - Domain-specific datasets: Social networks, molecular graphs, and financial transactions
    - Evaluation metrics and assessment frameworks:
        - Validity and plausibility of counterfactuals
        - Proximity and sparsity constraints
        - Fidelity to the underlying GNN model
    - Discussion on robustness and fairness in GCE methods

- Part V: Counterfactual Explainability in Evolving Graphs (40 mins)
    - What makes dynamic graphs different?
        - Temporal dependencies and evolving structures
        - Distribution shifts and their impact on GNN predictions
    - Challenges in applying counterfactual explanations to dynamic graphs:
        - Stability and consistency of counterfactuals over time
        - Identifying actionable interventions in dynamic environments
        - Handling streaming data and online learning settings
    - Existing approaches for counterfactual explainability in dynamic graphs:
        - Adapting instance-based explainers to evolving graphs
        - Extending model-level explanations for temporal settings
        - Learning-based techniques for robust counterfactuals in dynamic graphs

- Part VI: Frameworks In-the-Wild (35 mins)
    - Introduction to the challenges of developing and evaluating GCE methods
    - GRETEL’s design, core components, and their interaction
    - Basic explanation pipeline and evaluation

- Part VII: What's next? (10 mins)
    - Open discussion on future trends and developments in research and industry

Figure 1: Brief outline of the tutorial with its time schedule.

During the first part of the tutorial, we introduce GNNs [2] and their underlying message-passing mechanism to solve graph prediction problems. We will delve into the different types of GNNs and their application in real-world problems [3], such as protein-protein interaction, drug-target affinity prediction, and anomaly detection in social networks.
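To make the message-passing idea concrete, here is a minimal sketch of a single aggregation round, written in plain Python. It is an illustration only, not the formulation of any specific GNN in [2,3]: the function name `message_passing_step`, the dictionary-based graph representation, and the choice of a mean aggregator without learnable weights are all assumptions made for brevity.

```python
def message_passing_step(neighbors, features):
    """One round of mean-aggregation message passing (illustrative sketch).

    neighbors: dict mapping each node to a list of adjacent nodes.
    features:  dict mapping each node to a list of floats (its current state).
    """
    updated = {}
    for node, feat in features.items():
        # Each node collects its own state plus the states of its neighbours.
        messages = [feat] + [features[nbr] for nbr in neighbors[node]]
        # Aggregate with an element-wise mean; a real GNN layer would follow
        # this with a learnable transformation and a non-linearity.
        updated[node] = [sum(vals) / len(messages) for vals in zip(*messages)]
    return updated


# Path graph 0 - 1 - 2 with one scalar feature per node.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
features = {0: [1.0], 1: [2.0], 2: [3.0]}
hidden = message_passing_step(neighbors, features)
print(hidden)  # {0: [1.5], 1: [2.0], 2: [2.5]}
```

Stacking several such rounds lets information travel further than one hop, which is also why a prediction can depend on graph structure in ways that are hard to inspect directly.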

In the second part, we present the challenges of deploying black-box models in critical scenarios and show how post-hoc interpretability helps uncover “what is happening under the hood”. Here, we introduce factual explanations [4] and briefly revisit the most interesting methods in this category, namely GNNExplainer [5] and GraphLIME [6].

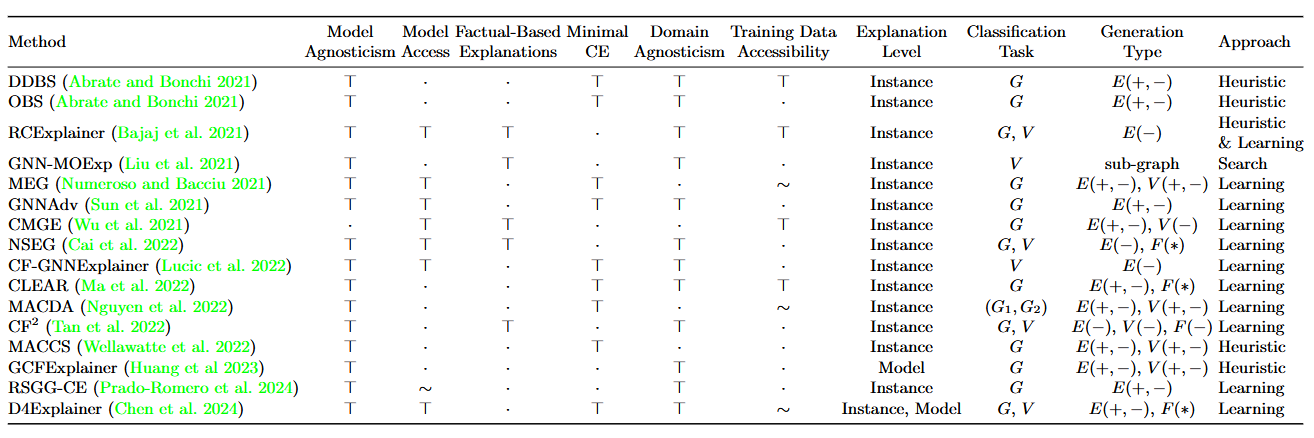

In the third part, we formalise the definition of a Graph Counterfactual Explanation (GCE). We present a taxonomy of the most important references [1], categorised as in Table 1, including search-based explainers [7], heuristic-based ones [8,9,10], learning-based ones [9,11,12,13,14,15,16,17,18], and global-level explanation methods [19]. Additionally, we list the most common benchmarking datasets and a set of evaluation metrics [4,20] needed to assess the strengths and weaknesses of each counterfactual explainer in the literature.

Table 1: The most important references that will be covered in the tutorial.
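As a rough illustration of what a search-based explainer and its evaluation look like, the sketch below exhaustively toggles edges until a classifier's prediction flips, then scores the result. Everything in it is hypothetical: `has_triangle` is a toy stand-in for a trained GNN oracle, `find_counterfactual`, `edit_distance`, and `sparsity` are names invented here, and the sparsity normalisation (edits divided by the number of node pairs) is just one possible choice. It does not reproduce GRETEL's API or any method from Table 1.

```python
from itertools import combinations


def has_triangle(edges, n):
    """Toy oracle (stand-in for a trained GNN): class 1 if the graph has a triangle."""
    return int(any(
        {frozenset((a, b)), frozenset((b, c)), frozenset((a, c))} <= edges
        for a, b, c in combinations(range(n), 3)
    ))


def find_counterfactual(edges, n, oracle, max_edits=3):
    """Try edge toggles in order of increasing size; return the first graph
    whose predicted class differs from the original, or None if none is found."""
    original = oracle(edges, n)
    pairs = [frozenset(p) for p in combinations(range(n), 2)]
    for k in range(1, max_edits + 1):
        for flips in combinations(pairs, k):
            # Symmetric difference removes existing edges and adds missing ones.
            candidate = edges ^ set(flips)
            if oracle(candidate, n) != original:
                return candidate
    return None


def edit_distance(edges, cf_edges):
    """Proximity: number of edges added or removed."""
    return len(edges ^ cf_edges)


def sparsity(edges, cf_edges, n):
    """Edits normalised by the number of node pairs (an assumed normalisation)."""
    return edit_distance(edges, cf_edges) / (n * (n - 1) / 2)


# A triangle 0-1-2 with a pendant node 3.
graph = {frozenset(e) for e in [(0, 1), (0, 2), (1, 2), (2, 3)]}
cf = find_counterfactual(graph, 4, has_triangle)
print(sorted(tuple(sorted(e)) for e in cf))  # [(0, 2), (1, 2), (2, 3)]
print(edit_distance(graph, cf))              # 1
```

Because candidates are enumerated by increasing number of edits, the first valid counterfactual is also a minimal one under this distance. The cost grows combinatorially with graph size, which is one reason heuristic and learning-based explainers exist.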

Acknowledgement

The “Graphs Counterfactual Explainability: A Comprehensive Landscape (Tutorial at AAAI 2024)” event was organised as part of the ICSC, Centro Nazionale di Ricerca in High Performance Computing, Big Data e Quantum Computing (Prot. CN00000013) initiatives aimed at disseminating the project results to new communities and creating bridging opportunities. ICSC, Centro Nazionale di Ricerca in High Performance Computing, Big Data e Quantum Computing receives funding from the European Union – NextGenerationEU – National Recovery and Resilience Plan (Piano Nazionale di Ripresa e Resilienza, PNRR) – Project: CN00000013 – NATIONAL CENTRE FOR HPC, BIG DATA AND QUANTUM COMPUTING, Avviso pubblico D.D. n. 3138 del 16.12.2021, rettificato con D.D.3175 del 18.12.2021.

Meet the Speakers

Bardh Prenkaj

is a postdoc at the Technical University of Munich, Chair of Responsible Data Science, following a first postdoc position (10/2022 - 09/2024) at the Computer Science Department of Sapienza University of Rome. His research interests lie at the intersection of Trustworthy Machine Learning and AI for Healthcare. Recently, he has been dedicated to understanding the trade-off between transparency and performance in tasks such as Graph Classification, Anomaly Detection, and Shortcut Identification.

Andrea D'Angelo

is a Ph.D. student in Computer Science at the University of L’Aquila, where he previously completed his Bachelor’s and Master’s degrees in 2019 and 2022, respectively. In 2025, he spent a visiting research period at Aarhus University in Denmark. His research focuses on Responsible Data Science, particularly Machine Unlearning, Information Retrieval, and Bias and Fairness.

Efstratios Zaradoukas

is a Ph.D. student at the Chair of Responsible Data Science, Technical University of Munich. He earned his Master’s degree in Electrical and Computer Engineering at the University of Patras, Greece. He has experience in fields such as Image Captioning, Image Inpainting, and Recommender Systems. His primary research focuses on explainability for autoregressive generative models and on machine unlearning.

Mario Alfonso Prado-Romero

obtained his PhD at the Gran Sasso Science Institute in Italy. His primary research focuses on the confluence of Explainable AI and Graph Neural Networks. Before this, he gained experience in relevant fields such as Anomaly Detection, Data Mining, and Information Retrieval. Notably, he is the key contributor to the GRETEL project, which offers a comprehensive framework for developing and assessing Graph Counterfactual Explanations. Additionally, he was selected by NEC Laboratories Europe as the only intern of the Human-Centric AI group in 2023, to apply his expertise in eXplainable Artificial Intelligence (XAI) to Graph Neural Networks for Biomedical Applications.

Giovanni Stilo

is an associate professor of Computer Science and Data Science at the University of L’Aquila, where he leads the Master’s Degree in Data Science and is part of the Artificial Intelligence and Information Mining collective. He received his PhD in Computer Science in 2013, and in 2014, he was a visiting researcher at Yahoo! Labs in Barcelona. His research interests are related to machine learning, data mining, and artificial intelligence, with a special interest in (but not limited to) trustworthiness aspects such as Bias, Fairness, and Explainability. Specifically, he is the head of the GRETEL project, devoted to empowering research in the Graph Counterfactual Explainability field. He has co-organized a long series (2020-2023) of top-tier international events and journal special issues focused on Bias and Fairness in Search and Recommendation. He serves on the editorial boards of IEEE, ACM, Springer, and Elsevier journals such as TITS, TKDE, DMKD, AI, KAIS, and AIIM. He is responsible for “New technologies for data collection, preparation, and analysis” in the Territori Aperti project, coordinator of the activities on “Responsible Data Science and Training” of the PNRR SoBigData.it project, and PI of the “FAIR-EDU: Promote FAIRness in EDUcation Institutions” project. Throughout his academic career, he has devoted much of his attention to teaching and tutoring, working on more than 30 national and international theses (at B.Sc., M.Sc., and PhD levels). In more than ten years in academia, he has taught university-level courses on ten different topics and at different levels in the scientific field of Computer and Data Science.

Bibliography

[1] Mario Alfonso Prado-Romero, Bardh Prenkaj, Giovanni Stilo, and Fosca Giannotti. A Survey on Graph Counterfactual Explanations: Definitions, Methods, Evaluation, and Research Challenges. ACM Comput. Surv. (September 2023). https://doi.org/10.1145/3618105

[2] F. Scarselli, M. Gori, A. C. Tsoi, M. Hagenbuchner and G. Monfardini, The Graph Neural Network Model, in IEEE Transactions on Neural Networks, vol. 20, no. 1, pp. 61-80, Jan. 2009, https://doi.org/10.1109/TNN.2008.2005605

[3] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang and P. S. Yu, A Comprehensive Survey on Graph Neural Networks, in IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 1, pp. 4-24, Jan. 2021, https://doi.org/10.1109/TNNLS.2020.2978386

[4] H. Yuan, H. Yu, S. Gui and S. Ji, Explainability in Graph Neural Networks: A Taxonomic Survey, in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 5, pp. 5782-5799, 1 May 2023, https://doi.org/10.1109/TPAMI.2022.3204236

[5] Ying, Z., Bourgeois, D., You, J., Zitnik, M., and Leskovec, J., 2019. GNNExplainer: Generating explanations for graph neural networks. Advances in neural information processing systems, 32.

[6] Q. Huang, M. Yamada, Y. Tian, D. Singh and Y. Chang, GraphLIME: Local Interpretable Model Explanations for Graph Neural Networks, in IEEE Transactions on Knowledge and Data Engineering, vol. 35, no. 7, pp. 6968-6972, 1 July 2023, https://doi.org/10.1109/TKDE.2022.3187455

[7] Y. Liu, C. Chen, Y. Liu, X. Zhang and S. Xie, Multi-objective Explanations of GNN Predictions, 2021 IEEE International Conference on Data Mining (ICDM), Auckland, New Zealand, 2021, pp. 409-418, https://doi.org/10.1109/ICDM51629.2021.00052

[8] Carlo Abrate and Francesco Bonchi. 2021. Counterfactual Graphs for Explainable Classification of Brain Networks. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining (KDD ‘21). Association for Computing Machinery, New York, NY, USA, 2495–2504. https://doi.org/10.1145/3447548.3467154

[9] Bajaj, M., Chu, L., Xue, Z., Pei, J., Wang, L., Lam, P., and Zhang, Y., 2021. Robust counterfactual explanations on graph neural networks. Advances in neural information processing systems, 34.

[10] Wellawatte GP, Seshadri A, White AD. Model agnostic generation of counterfactual explanations for molecules. Chem Sci. 2022 Feb 16;13(13):3697-3705. https://doi.org/10.1039/d1sc05259d

[11] Cai, R., Zhu, Y., Chen, X., Fang, Y., Wu, M., Qiao, J., and Hao, Z. 2022. On the Probability of Necessity and Sufficiency of Explaining Graph Neural Networks: A Lower Bound Optimization Approach. arXiv preprint arXiv:2212.07056

[12] Ana Lucic, Maartje A. Ter Hoeve, Gabriele Tolomei, Maarten De Rijke, Fabrizio Silvestri, CF-GNNExplainer: Counterfactual Explanations for Graph Neural Networks, in Proceedings of The 25th International Conference on Artificial Intelligence and Statistics, PMLR 151:4499-4511, 2022.

[13] Ma, J.; Guo, R.; Mishra, S.; Zhang, A.; and Li, J., 2022. CLEAR: Generative Counterfactual Explanations on Graphs. Advances in neural information processing systems, 35, 25895–25907.

[14] T. M. Nguyen, T. P. Quinn, T. Nguyen and T. Tran, Explaining Black Box Drug Target Prediction Through Model Agnostic Counterfactual Samples, in IEEE/ACM Transactions on Computational Biology and Bioinformatics, vol. 20, no. 2, pp. 1020-1029, 1 March-April 2023, https://doi.org/10.1109/TCBB.2022.3190266

[15] Numeroso, Danilo and Bacciu, Davide, MEG: Generating Molecular Counterfactual Explanations for Deep Graph Networks, 2021 International Joint Conference on Neural Networks (IJCNN). https://doi.org/10.1109/IJCNN52387.2021.9534266

[16] Sun, Y., Valente, A., Liu, S., & Wang, D. (2021). Preserve, Promote, or Attack? GNN Explanation via Topology Perturbation. ArXiv. https://arxiv.org/abs/2103.13944

[17] Juntao Tan, Shijie Geng, Zuohui Fu, Yingqiang Ge, Shuyuan Xu, Yunqi Li, and Yongfeng Zhang. 2022. Learning and Evaluating Graph Neural Network Explanations based on Counterfactual and Factual Reasoning. In Proceedings of the ACM Web Conference 2022 (WWW ‘22). https://doi.org/10.1145/3485447.3511948

[18] Wu, H.; Chen, W.; Xu, S.; and Xu, B. 2021. Counterfactual Supporting Facts Extraction for Explainable Medical Record Based Diagnosis with Graph Network. In Proc. of the 2021 Conf. of the North American Chapter of the Assoc. for Comp. Linguistics: Human Lang. Techs., 1942–1955. https://aclanthology.org/2021.naacl-main.156/

[19] Zexi Huang, Mert Kosan, Sourav Medya, Sayan Ranu, and Ambuj Singh. 2023. Global Counterfactual Explainer for Graph Neural Networks. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining (WSDM ‘23). Association for Computing Machinery, New York, NY, USA, 141–149. https://doi.org/10.1145/3539597.3570376

[20] Guidotti, R. Counterfactual explanations and how to find them: literature review and benchmarking. Data Min Knowl Disc (2022). https://doi.org/10.1007/s10618-022-00831-6